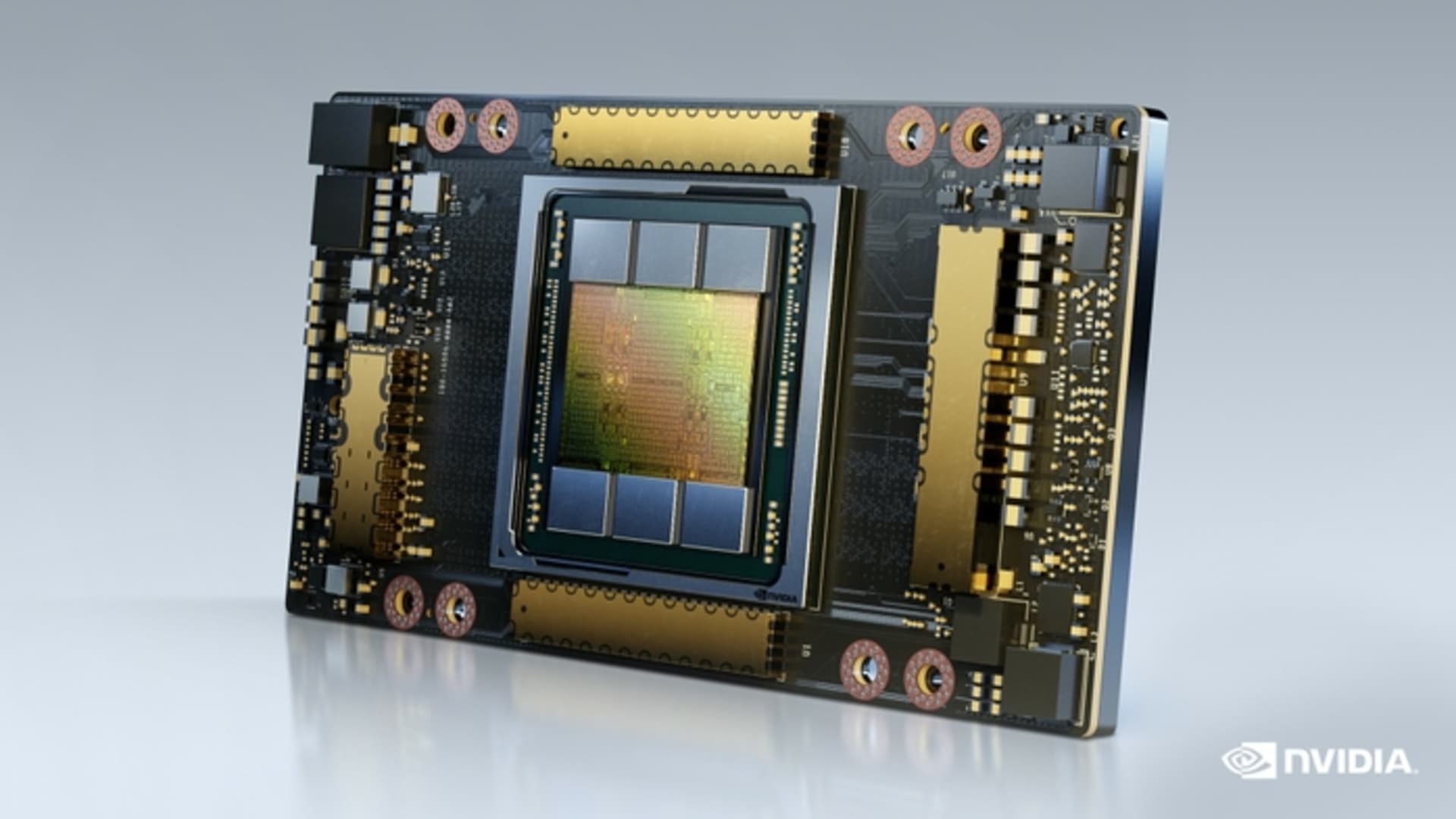

Discovering GPU-friendly Deep Neural Networks with Unified Neural Architecture Search | NVIDIA Technical Blog

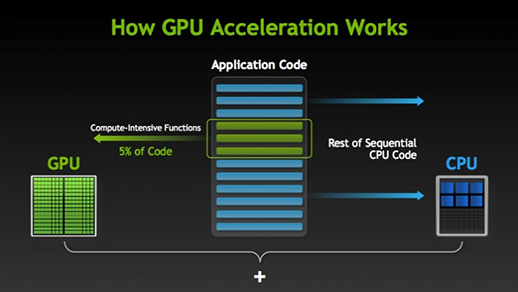

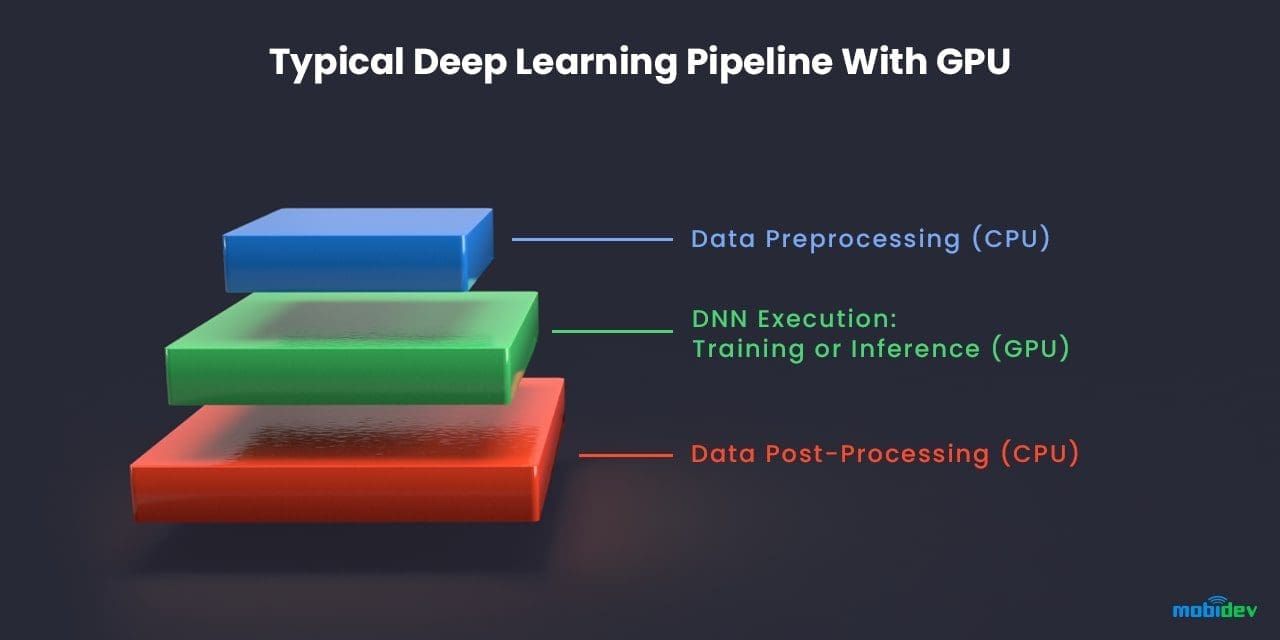

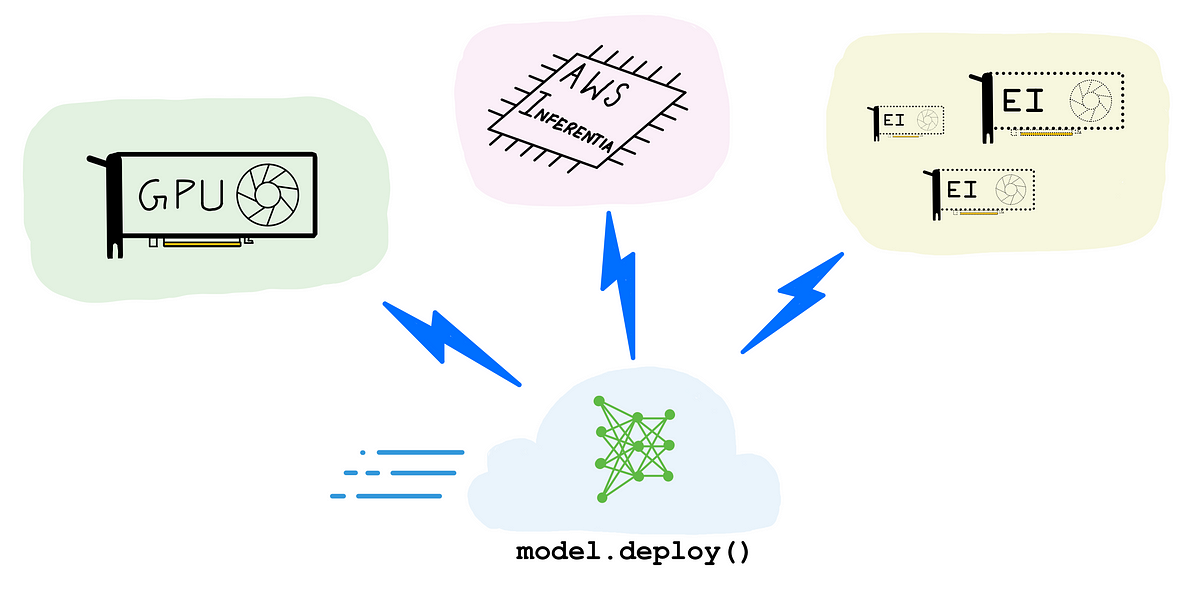

A complete guide to AI accelerators for deep learning inference — GPUs, AWS Inferentia and Amazon Elastic Inference | by Shashank Prasanna | Towards Data Science

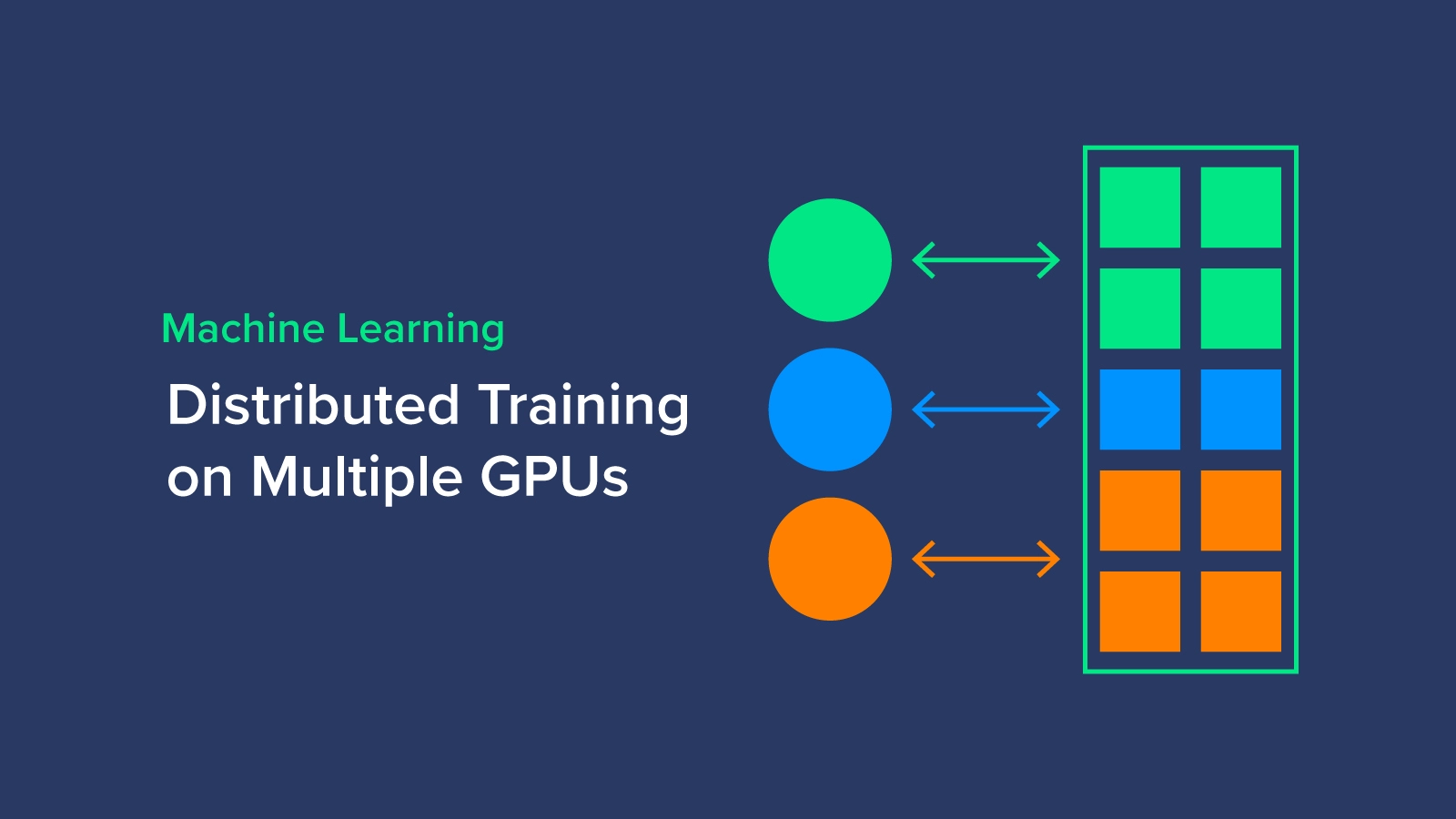

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science